
Author: David Masri, Founder & CEO

Salesforce triggers must be coded so that they can process batches of records. This is referred to as "bulkifying" the trigger. If a Salesforce trigger is not properly bulkified, any batch we push with more than one record is likely to fail. Bulkified triggers have a problem of their own: if one record fails, the entire batch may fail, and every record in the batch comes back with the same obscure error message. This is usually because of some aggregation done within the trigger.

For example, suppose we have a (before update) trigger on the Contact object that is properly bulkified. The trigger takes all the contacts in a batch, groups them by account, does some math to calculate a data point, and then does a single update to each Account record. If even one of the contact updates fails (because of an invalid e-mail address or some validation rule), the entire batch fails with generic error messages. This makes sense because the trigger can't calculate the Account-level data point without every contact in the group: it's all or nothing!

This is a nightmare for the people who monitor the row-level error logs! They have 200 records in the error log and no idea which record is the bad one or what is wrong with it! The general consensus online is to set the batch size to one. That way you always know exactly which record failed, and Salesforce can issue proper error messages. The problem with this approach is abysmal performance and abuse of your API limits, because every record now costs its own API call.
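To put a rough number on that cost, here is a minimal sketch of the arithmetic. The total of 10,000 records is an assumption chosen for illustration, and `api_calls` is a hypothetical helper, not part of any Salesforce library.

```python
import math


def api_calls(record_count: int, batch_size: int) -> int:
    """Number of API calls needed to push record_count records."""
    return math.ceil(record_count / batch_size)


total_records = 10_000  # assumed load size, for illustration only

print(api_calls(total_records, 200))  # 50 calls at a batch size of 200
print(api_calls(total_records, 1))    # 10000 calls at a batch size of one
```

Under these assumptions, dropping the batch size from 200 to one multiplies the number of calls by 200.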

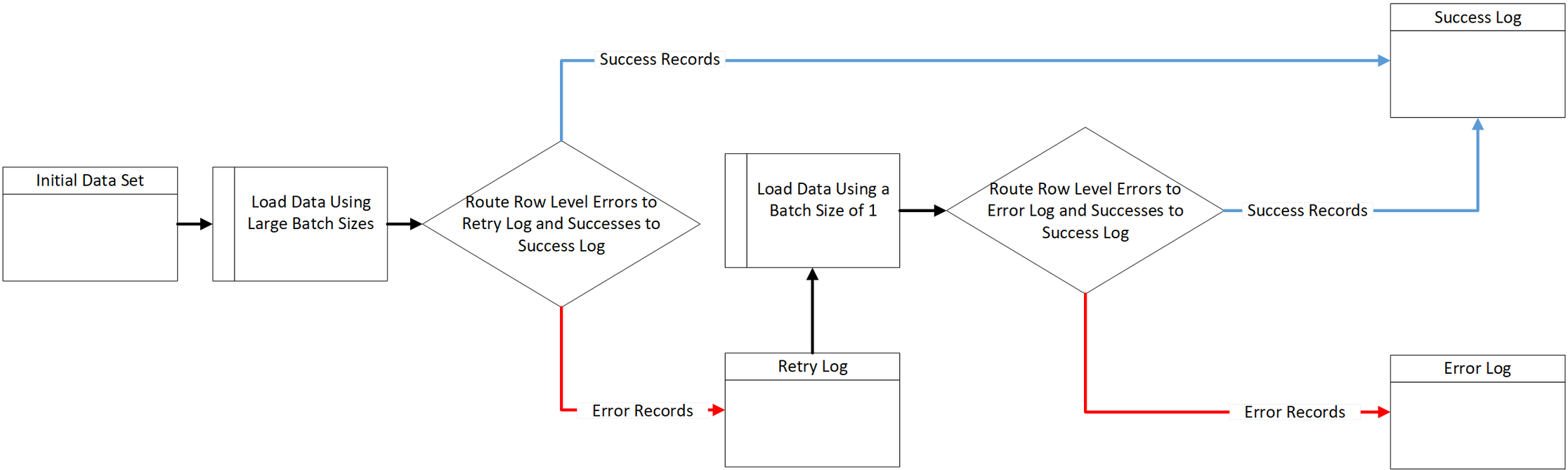

I have an alternate approach: process the records twice. First, push them normally with a proper batch size and catch the errors in a retry log. Then reprocess only the records in the retry log with a batch size of one. This way we get the good performance of large batch sizes, and only the records with real errors are left unprocessed. Those records are logged with a proper error message, making it easy to identify and fix the issues.
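The two-pass approach can be sketched roughly as follows. This is a simplified illustration, not a real integration: `fake_push` is a hypothetical stand-in for the Salesforce API that mimics the all-or-nothing trigger behavior described earlier, and `load_with_retry`, `chunked`, and the record layout are names assumed for the example.

```python
from typing import Callable, Dict, Iterator, List, Optional, Tuple

Record = Dict[str, str]
# A push function sends one batch and returns one error message
# (or None on success) per record, in the same order as the batch.
PushFn = Callable[[List[Record]], List[Optional[str]]]


def chunked(records: List[Record], size: int) -> Iterator[List[Record]]:
    """Yield consecutive slices of at most `size` records."""
    for start in range(0, len(records), size):
        yield records[start:start + size]


def load_with_retry(
    records: List[Record], push: PushFn, batch_size: int = 200
) -> List[Tuple[Record, str]]:
    """Pass 1 uses full batches; pass 2 retries failures one at a time."""
    retry_log: List[Record] = []
    for batch in chunked(records, batch_size):
        results = push(batch)
        retry_log.extend(r for r, err in zip(batch, results) if err is not None)

    error_log: List[Tuple[Record, str]] = []
    for record in retry_log:
        err = push([record])[0]  # batch size of one gives a precise error
        if err is not None:
            error_log.append((record, err))
    return error_log


def fake_push(batch: List[Record]) -> List[Optional[str]]:
    """Hypothetical API: one bad record fails the whole batch generically."""
    bad = [r for r in batch if "@" not in r["Email"]]
    if not bad:
        return [None] * len(batch)
    if len(batch) == 1:
        return [f"invalid e-mail address: {batch[0]['Email']}"]
    return ["batch failed in trigger"] * len(batch)


# Usage: 1,000 contacts, one of which has a bad e-mail address.
contacts = [{"Id": str(i), "Email": f"user{i}@example.com"} for i in range(1000)]
contacts[417]["Email"] = "not-an-email"

call_sizes: List[int] = []  # records the size of every batch pushed


def counted_push(batch: List[Record]) -> List[Optional[str]]:
    call_sizes.append(len(batch))
    return fake_push(batch)


errors = load_with_retry(contacts, counted_push)
print(errors)           # only contact 417, with the specific message
print(len(call_sizes))  # 205 calls: 5 full batches plus 200 single retries
```

In this simulated run, one bad record costs 205 calls instead of the 1,000 that a batch size of one would need for the whole load, and the final error log holds a single record with a specific message.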

I have diagrammed this process out for you below:

This article is adapted from my book: Developing Data Migrations and Integrations with Salesforce.

Have a question you would like to see as part of my FAQ blog series? Email it to me! Dave@Gluon.Digital